Lightweight AI for edge computing in space

Benchmarking on Raspberry Pi & Nvidia Jetson Nano for FFT and beyond

At Lightscline, we’re redefining the 1940s principles of scientific computing (the Shannon-Nyquist sampling theorem) using AI. With 4 lines of code, Lightscline AI reduces sensor data infrastructure and human costs by 90% by training proprietary machine learning models to selectively focus on just 10% of the raw data.

Our selective sensing extends end-to-end across a machine learning deployment, from data collection through prediction, using just 4 lines of code. Because our neural networks analyze only 10% of the raw data, they achieve the same accuracy while being >10x faster and more energy efficient.

Value props for space applications:

In-situ material characterization and onboard anomaly detection and computing are difficult today owing to the extreme SWaP-C (size, weight, power, and cost) requirements of space flight. Lightscline’s selective sensing enables several applications that are not possible today. Some value props include:

1. >10x reduction in edge computing power compared with conventional approaches

2. >10x faster for the same analytics accuracy

3. 90% reduction in data infra costs

4. 10x productivity increase for data scientists and machine learning/embedded engineers (more deployments)

5. Automatic selection of the important 10% of windows

Use-case:

Comparison of on-board FFT computation time / complexity on Raspberry Pi 3 and Nvidia Jetson Nano – using conventional and Lightscline’s approach.

In this use-case, we compare on-board FFT computation time and complexity across different edge computing hardware, using both the conventional approach and Lightscline’s approach. We find that the Nvidia Jetson Nano is much faster than the RPi for FFT computation. We then use Lightscline’s approach to get 10-74x savings in computation time and complexity compared with a multi-layer perceptron (a neural network), which would be needed for analytics on top of the FFT. Using just 4 lines of code, Lightscline AI performs end-to-end data collection through prediction with >10x speed and energy efficiency over conventional approaches.

Table 1 shows the experimental parameters for this use-case.

The next two figures show the time for calculating FFT on the Raspberry Pi 3 and Nvidia Jetson Nano. FFT is a commonly used algorithm for scientific computing and spectral analysis.

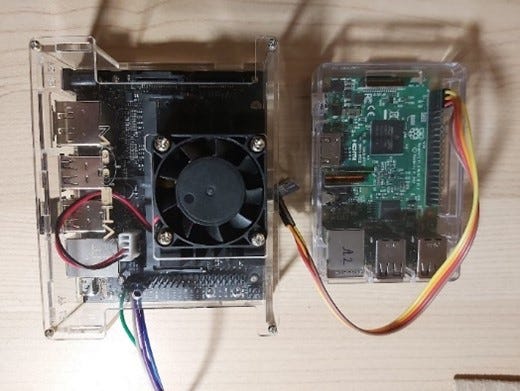

Pictured: an Nvidia Jetson Nano and a Raspberry Pi 3.

The mean FFT computation time on the RPi 3 was 1.0112 sec with a standard deviation of 1.8319 sec. The mean computation time on the Nvidia Jetson Nano was 0.0518 sec with a standard deviation of 0.0097 sec. Edge computing times are therefore much lower on the Nano than on the RPi: the ratio of the mean times is about 19.5x. Read more here.
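A timing measurement of this kind can be sketched in a few lines of Python. This is a minimal illustration, not the benchmark script behind the numbers above: the signal length (`2**16`), the number of runs (`20`), the random test signal, and the helper name `time_fft` are all assumptions, since the actual parameters live in Table 1. The last lines simply compute the ratio of the two reported mean times.

```python
import time

import numpy as np


def time_fft(n_samples, n_runs, seed=0):
    """Return (mean, std) wall-clock seconds over n_runs FFTs of one random real signal."""
    rng = np.random.default_rng(seed)
    signal = rng.standard_normal(n_samples)  # assumed stand-in for real sensor data
    durations = []
    for _ in range(n_runs):
        start = time.perf_counter()
        np.fft.rfft(signal)  # real-input FFT
        durations.append(time.perf_counter() - start)
    return float(np.mean(durations)), float(np.std(durations))


# Assumed parameters; replace with the values from Table 1 when reproducing.
mean_s, std_s = time_fft(n_samples=2**16, n_runs=20)
print(f"mean {mean_s:.6f} s, std {std_s:.6f} s")

# Ratio of the mean times reported in the text (RPi 3 vs. Jetson Nano).
rpi_mean_s, nano_mean_s = 1.0112, 0.0518
speedup = rpi_mean_s / nano_mean_s
print(f"Jetson Nano speedup over RPi 3 (mean time): {speedup:.1f}x")
```

Running the same script on each board gives directly comparable mean and standard deviation figures, provided the signal length and run count are held fixed.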

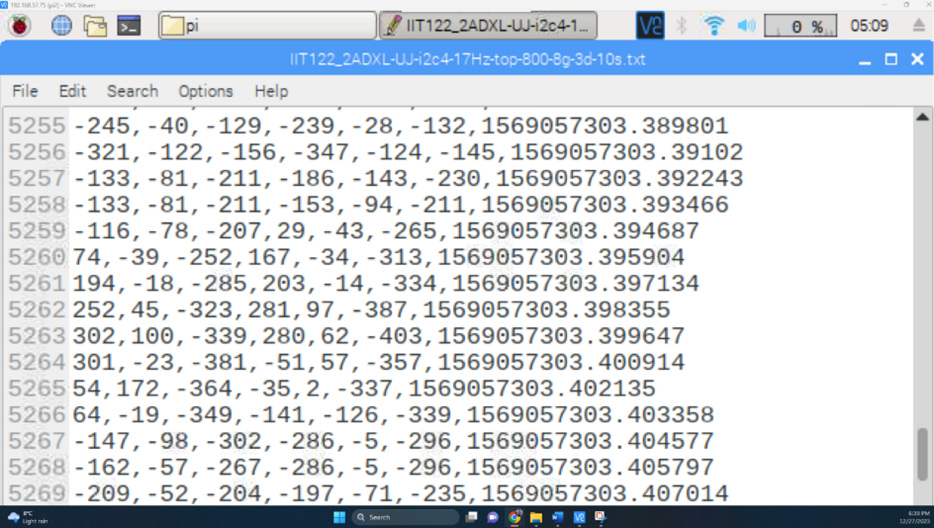

This figure shows some multi-channel data files for onboard processing.

However, FFT-based analysis relies on the 1940s Nyquist theorem, which requires collecting all the raw data sampled at the Nyquist rate. Lightscline’s AI does not collect every data point; instead, a training process learns how much data is needed for each task. From a user perspective, this all happens in just 4 lines of code, can be set up within 10 minutes, and does not require any external data sharing. Here’s how to get started with Lightscline AI using just 4 lines of code.

Lightscline AI is 10-74x more efficient than a standard neural network while using 100x less data. These tests were conducted on the Case Western Reserve University bearing fault dataset. We observe that power, storage, transmission, and latency requirements improve linearly, in the same way as computational complexity, when using Lightscline AI.
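To see why feeding a network less data can cut compute roughly in proportion, consider a back-of-the-envelope count of multiply-accumulate operations (MACs) for a plain dense network. This is only an illustrative sketch and not Lightscline's model or method: the window length (4000 samples), hidden layer sizes (64 and 32), and class count (10) are assumed values, and the helper `mlp_macs` is hypothetical. It does not reproduce the measured 10-74x figures; it only shows how the input-layer cost scales with the fraction of data kept.

```python
def mlp_macs(layer_sizes):
    """Multiply-accumulate count for one forward pass of a dense MLP (biases ignored)."""
    return sum(a * b for a, b in zip(layer_sizes, layer_sizes[1:]))


window_len = 4000   # assumed samples per input window
hidden = [64, 32]   # assumed hidden layer widths
n_classes = 10      # assumed number of output classes
keep_percent = 10   # the 10% of raw data mentioned in the text

kept_len = window_len * keep_percent // 100

full_macs = mlp_macs([window_len, *hidden, n_classes])
reduced_macs = mlp_macs([kept_len, *hidden, n_classes])

print(f"full input:    {full_macs} MACs")
print(f"reduced input: {reduced_macs} MACs")
print(f"ratio:         {full_macs / reduced_macs:.2f}x")
```

In this toy setup the first layer dominates the cost, so keeping 10% of the input brings the total close to, but slightly under, a 10x reduction; the later layers are unchanged and set the floor.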

Conclusion

Using just 4 lines of code, Lightscline AI performs end-to-end data collection through prediction with >10x speed and energy efficiency over conventional approaches governed by the Shannon-Nyquist sampling theorem. This leads to orders-of-magnitude savings in (i) data infrastructure costs and time, and (ii) human resource efficiency. Finally, it enables several new applications that are not possible today because of the extreme SWaP-C requirements of space flight compute. End users can easily validate this for themselves with a free trial by going here.